There is an abundance of information available on the web. Extracting relevant data from websites can be a valuable skill for various purposes, such as market research, data analysis, and automation. In this guide, we will explore how to extract data from websites using Java and the Jsoup library. Specifically, we will be crawling the Worldometers website to retrieve world population data.

with hands-on learning.

get the skills and confidence to land your next move.

What is Jsoup?

Jsoup is a popular open-source Java library that provides a convenient API for extracting and manipulating data from HTML and XML documents. It simplifies the process of web scraping by providing powerful methods to navigate, query, and extract data from HTML structures.

Setting Up Jsoup

To get started, you’ll need to include the Jsoup library in your Java project. You can download the Jsoup JAR file from the official Jsoup website and add it to your project’s dependencies.

Creating Crawler Class

To begin our data extraction journey, let’s start by implementing a crawler class in Java. This class will utilize the Jsoup library, which provides convenient methods for parsing HTML and extracting data from websites. Below is the code for our Crawler class:

import org.jsoup.Connection;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

import java.io.IOException;

public class Crawler {

private final Connection connection;

public Crawler(String url) {

this.connection = Jsoup.connect(url);

}

public Document getDocument() throws IOException {

return this.connection.get();

}

static class TablePrinter {

private final Element table;

public TablePrinter(Element table) {

this.table = table;

}

public void print() {

Elements rows = this.table.getElementsByTag("tr");

rows.forEach(row -> {

Elements headerElements = row.getElementsByTag("th");

Elements dataElements = row.getElementsByTag("td");

// Print headers

printElements(headerElements);

// Print table data

printElements(dataElements);

});

}

private void printElements(Elements elements) {

if (!elements.isEmpty()) {

// Print each element with a fixed width of 30

elements.eachText().forEach(elementText -> System.out.printf("%30s\t", elementText));

// Move to a new line after printing a row

System.out.println();

}

}

}

}

Now that we have implemented the Crawler class, let’s explore how to utilize it to extract data from websites. The code snippet below demonstrates how to query the Worldometers website for population data:

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import java.io.IOException;

public class Main {

public static void main(String[] args) {

String url = "https://www.worldometers.info/world-population/population-by-country/";

// Create a new crawler instance

Crawler crawler = new Crawler(url);

try {

// Get the document from the specified URL

Document document = crawler.getDocument();

// Find the table with the given ID

Element table = document.getElementById("example2");

if(table != null) {

// Create a table printer instance

Crawler.TablePrinter tablePrinter = new Crawler.TablePrinter(table);

// Print the table

tablePrinter.print();

}

} catch (IOException exception) {

// Handle any IO errors

System.out.println(exception.getMessage());

}

}

}

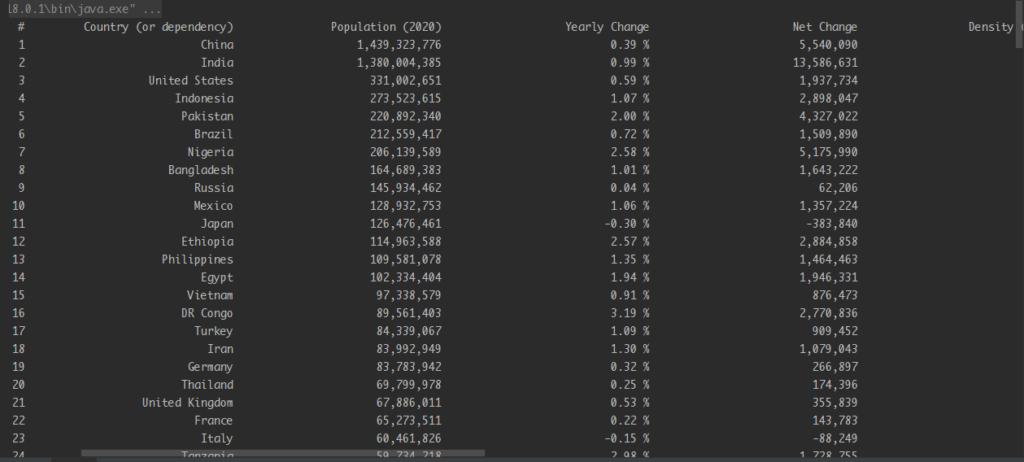

There is more to this code than meets the eye. I highly recommend running the code to see the output it produces. When executed, the code generates output in a tabular format, as depicted in the image below:

That was all I had to share with you guys. If you found this code informative and would love to see more, don’t forget to subscribe to our newsletter! 😊